G³AN: Disentangling Appearance and Motion for Video Generation

In CVPR 2020

Yaohui Wang¹'² Piotr Biliński³ François Brémond¹'² Antitza Dantcheva¹'²

¹ Inria ² Université Côte d'Azur ³ University of Warsaw

[PDF] [Code] [Bibtex]

Abstract

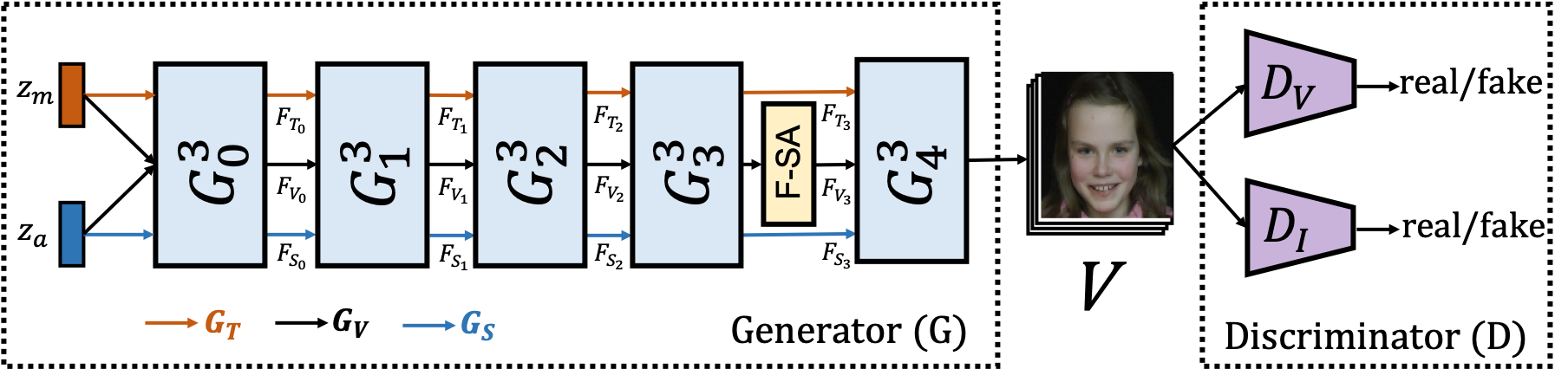

Creating realistic human videos introduces the challenge of being able to simultaneously generate both appearance, as well as motion. To tackle this challenge, we introduce G³AN, a novel spatio-temporal generative model, which seeks to capture the distribution of high dimensional video data and to model appearance and motion in disentangled manner. The latter is achieved by decomposing appearance and motion in a three-stream Generator, where the main stream aims to model spatio-temporal consistency, whereas the two auxiliary streams augment the main stream with multi-scale appearance and motion features, respectively. An extensive quantitative and qualitative analysis shows that our model systematically and significantly outperforms state-of-the-art methods on the facial expression datasets MUG and UvA-NEMO, as well as the Weizmann and UCF101 datasets on human action. Additional analysis on the learned latent representations confirms the successful decomposition of appearance and motion.

Demos

Unconditional & Conditional Generation

Multi-length Generation

Acknowledgements

This work is supported by the French Government (National Research Agency, ANR), under Grant ANR-17-CE39-0002.

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author’s copyright.